Navigating AI compliance in 2025: Key insights from Veriff’s webinar

AI is reshaping industries at an unprecedented pace, but with innovation comes greater regulatory challenges. How can businesses stay ahead of evolving compliance requirements like the EU AI Act? In Veriff’s latest webinar, top industry experts break down what you need to know about navigating the complex world of AI regulations and their global impact.

As artificial intelligence (AI) continues to transform industries worldwide, businesses face increasing regulatory scrutiny. In Veriff’s latest webinar, industry experts Chris Hooper (Director of Brand, Veriff), Tea Park (Attorney at Law, NJORD Law Firm), and Jeremy Joly (Co-founder and CPO of Spektr) discussed the evolving compliance landscape, focusing on the EU AI Act and its global implications.

This article summarizes the key takeaways to help businesses navigate AI compliance in 2025, with practical guidance on meeting EU AI Act requirements, GDPR alignment, and high-risk AI system obligations.

Understanding the EU AI Act and its global impact

The EU AI Act is one of the most significant regulatory frameworks governing AI technologies. With a broad scope, it applies to:

- Companies based in the European Union

- Any organization providing AI-driven services or products to EU users

Key definitions under the Act

- AI System – A machine-based system designed to operate with varying levels of autonomy and adaptiveness, using inputs to generate outputs such as predictions, content, recommendations, or decisions that influence physical or virtual environments.

- Provider – The entity developing an AI system.

- Deployer – An organization implementing an AI system in its operations.

- Importer – A company bringing AI solutions developed outside the EU into the region.

- Distributor – A business that resells or facilitates AI solutions in the market.

Understanding where your business fits within this framework is essential for compliance.

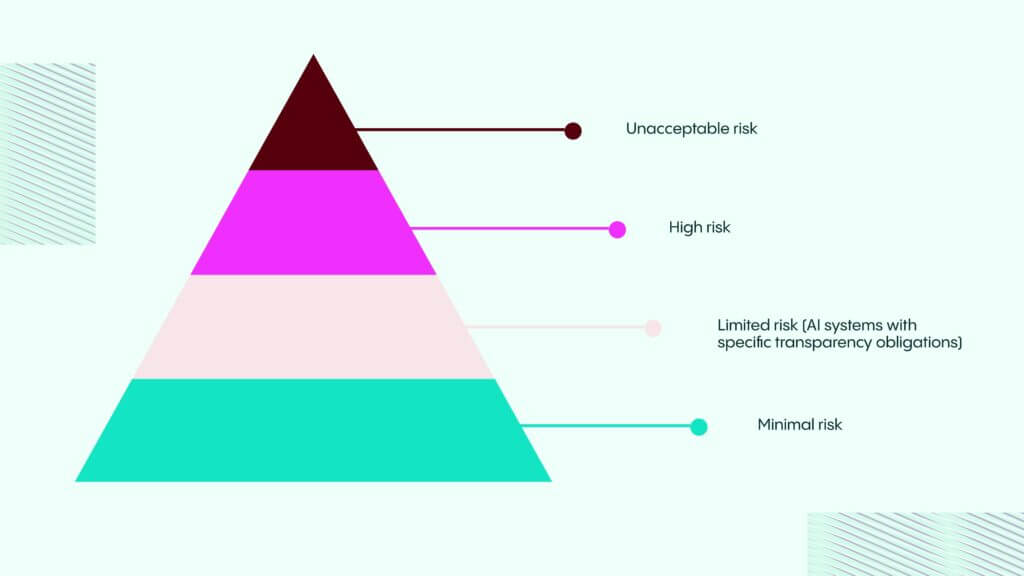

AI risk classification: What businesses need to know

One of the most critical aspects of the EU AI Act is its risk-based approach, which classifies AI systems into four categories:

Unacceptable risk (strictly prohibited)

These AI applications are banned due to their potential harm to individuals and society. Examples include:

- Social scoring systems

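- Predictive policing based solely on profiling or personality traits

- Real-time remote biometric identification in publicly accessible spaces for law enforcement (permitted only in narrowly defined cases with strict safeguards)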

High-risk AI systems (extensive compliance obligations)

Businesses must implement stringent documentation, risk management, and human oversight for high-risk AI systems, including applications in:

- Healthcare (e.g., AI-assisted diagnosis)

- Finance (e.g., credit scoring; AI used solely to detect financial fraud is carved out of this category)

- Education and employment (e.g., AI-driven hiring assessments)

Limited-risk AI systems (transparency obligations)

AI applications in this category require transparency measures, such as user notifications when interacting with AI-generated content. Examples:

- Chatbots

- AI-generated media and deepfakes

Minimal risk AI systems (best practice recommendations)

These AI applications have no major compliance obligations but are encouraged to follow best practices for responsible AI use.

Key dates to watch for AI compliance

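- February 2, 2025 – Prohibitions on unacceptable-risk AI practices and AI literacy obligations begin to apply

- August 2, 2025 – Obligations for general-purpose AI models, along with governance and penalty provisions, begin to apply

- August 2, 2026 – Most remaining provisions apply, including obligations for most high-risk AI systems

- August 2, 2027 – Obligations apply to high-risk AI systems embedded in products covered by EU product-safety legislation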
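
Teams that track these milestones in internal tooling could compute how far away each date is. The following is a minimal sketch that uses only the four dates listed above; the function names `days_until_milestones` and `next_milestone` are illustrative assumptions, not part of any official tool.

```python
from datetime import date
from typing import Dict, Optional

# Milestone dates taken from the list above.
MILESTONES = [
    date(2025, 2, 2),
    date(2025, 8, 2),
    date(2026, 8, 2),
    date(2027, 8, 2),
]


def days_until_milestones(today: date) -> Dict[date, int]:
    """Map each milestone to the days remaining (negative once it has passed)."""
    return {m: (m - today).days for m in MILESTONES}


def next_milestone(today: date) -> Optional[date]:
    """Return the earliest milestone on or after `today`, or None if all have passed."""
    upcoming = [m for m in MILESTONES if m >= today]
    return min(upcoming) if upcoming else None


if __name__ == "__main__":
    for milestone, days in days_until_milestones(date.today()).items():
        status = "passed" if days < 0 else f"in {days} days"
        print(f"{milestone.isoformat()}: {status}")
```

Passing `today` as a parameter, rather than reading the clock inside the functions, keeps the logic easy to test.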

Key compliance steps for businesses (EU AI Act & GDPR)

Conduct an AI audit

Start by identifying all AI-driven processes within your organization and classifying them according to the EU AI Act’s risk categories: Unacceptable, High-Risk, Limited, or Minimal. Once categorized, assess any compliance gaps to ensure you meet the necessary regulations without risking over-compliance or falling short of requirements.
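
As a rough illustration of what such an audit inventory might look like in code, the sketch below records each AI system with its risk tier and reports which controls are still missing. The control names and the `REQUIRED_CONTROLS` mapping are hypothetical simplifications based on the obligations summarized in this article; a real checklist would come from legal review.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Set


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, simplified checklist per tier (an assumption for illustration).
REQUIRED_CONTROLS = {
    RiskTier.HIGH: {"documentation", "risk_management", "human_oversight"},
    RiskTier.LIMITED: {"user_notification"},
    RiskTier.MINIMAL: set(),
}


@dataclass
class AISystem:
    name: str
    tier: RiskTier
    controls_in_place: Set[str] = field(default_factory=set)


def compliance_gaps(system: AISystem) -> Set[str]:
    """Return the controls a system still lacks for its risk tier."""
    if system.tier is RiskTier.UNACCEPTABLE:
        # Prohibited uses cannot be fixed by adding controls.
        return {"prohibited_use"}
    return REQUIRED_CONTROLS[system.tier] - system.controls_in_place


if __name__ == "__main__":
    inventory = [
        AISystem("support-chatbot", RiskTier.LIMITED),
        AISystem("cv-screening", RiskTier.HIGH, {"documentation"}),
    ]
    for system in inventory:
        print(system.name, sorted(compliance_gaps(system)))
```

Keeping the tier-to-controls mapping in one place means the checklist can be updated as guidance evolves without touching the inventory itself.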

Align with GDPR and data protection regulations

AI systems frequently handle personal data, making GDPR compliance essential. To ensure this, organizations should adopt privacy-enhancing techniques such as data anonymization and minimization to reduce exposure to personal information, synthetic data generation to train AI models without using real user data, and encryption alongside secure data handling to protect sensitive information. Additionally, adherence to lawful processing principles, including transparency and respecting data subject rights, is vital for maintaining compliance and building trust.
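
To make two of these techniques concrete, here is a minimal sketch of data minimization (keeping only allow-listed fields) combined with keyed hashing of an identifier. The field names and the `ALLOWED_FIELDS` allow-list are hypothetical. Note that keyed hashing is pseudonymization rather than anonymization: pseudonymized data is still personal data under GDPR, so this reduces exposure but does not remove GDPR obligations.

```python
import hashlib
import hmac

# Hypothetical allow-list: only the fields the model is assumed to need.
ALLOWED_FIELDS = {"user_id", "country", "document_type"}


def minimize(record: dict) -> dict:
    """Data minimization: drop every field that is not on the allow-list."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}


def pseudonymize_id(user_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 digest (hex string)."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_training(record: dict, secret_key: bytes) -> dict:
    """Minimize a record, then pseudonymize its identifier if present."""
    cleaned = minimize(record)
    if "user_id" in cleaned:
        cleaned["user_id"] = pseudonymize_id(str(cleaned["user_id"]), secret_key)
    return cleaned


if __name__ == "__main__":
    raw = {"user_id": "u-1001", "email": "jane@example.com", "country": "EE"}
    print(prepare_for_training(raw, secret_key=b"replace-with-a-managed-secret"))
```

Using a keyed hash instead of a plain hash means that someone without the key cannot rebuild the mapping by hashing guessed identifiers; the key itself should be stored and rotated like any other secret.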

Establish human oversight mechanisms

The EU AI Act requires “meaningful human intervention” in AI-driven decisions, emphasizing the importance of maintaining human oversight in high-risk applications. To comply, organizations are encouraged to appoint AI compliance officers or establish AI governance teams to oversee implementation and adherence to regulations. Additionally, it is crucial to ensure that humans retain the final decision-making authority in scenarios where AI poses significant risks. Regular testing of AI models for bias, fairness, and accountability is also essential to align with the Act’s standards and promote ethical AI practices.
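
One way to picture "humans retain the final decision-making authority" in software is a routing step that refuses to finalize a high-risk decision without a human reviewer. The sketch below is an illustrative assumption about how that could be wired, not a prescribed design; the `Decision` type and `decide` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Decision:
    outcome: str           # final outcome, e.g. "approve" or "reject"
    decided_by: str        # "model" or "human"
    model_suggestion: str  # what the model proposed, kept for audit trails


def decide(
    model_suggestion: str,
    high_risk: bool,
    human_review: Optional[Callable[[str], str]] = None,
) -> Decision:
    """Finalize a decision, requiring a human reviewer for high-risk cases."""
    if not high_risk:
        return Decision(model_suggestion, "model", model_suggestion)
    if human_review is None:
        raise ValueError("high-risk decisions require a human reviewer")
    # The reviewer sees the model's suggestion but returns the final outcome.
    final_outcome = human_review(model_suggestion)
    return Decision(final_outcome, "human", model_suggestion)


if __name__ == "__main__":
    # A reviewer who overrides the model's suggestion.
    result = decide("reject", high_risk=True, human_review=lambda s: "approve")
    print(result)
```

Recording both the model's suggestion and the final outcome makes it possible to measure later how often reviewers override the model, which can feed into bias and fairness testing.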

Strengthen third-party AI vendor compliance

If your AI solutions depend on external vendors or APIs, make sure contracts clearly outline compliance responsibilities, verify that AI providers adhere to the EU AI Act and GDPR standards, and conduct regular audits and impact assessments of third-party systems.

Stay updated on AI regulatory changes

AI regulations are changing quickly, and businesses need to stay ahead. It’s important to track updates to the EU AI Act and other regional policies, engage in industry discussions and regulatory forums, and participate in AI ethics groups. As new rules are introduced, companies should adapt their AI governance frameworks to remain compliant.

Final thoughts: Preparing for the future of AI compliance

As AI regulations take shape, businesses must proactively prepare for compliance, rather than react to penalties. With strategic planning, clear documentation, and a focus on ethical AI deployment, organizations can stay ahead of regulatory challenges while leveraging AI for innovation.

Veriff’s webinar emphasized the importance of transparency, accountability, and adaptability in AI governance. By following these insights, businesses can ensure compliance and build trust with customers and regulators alike.

Recommended AI guidelines for businesses:

- EU Commission’s “Guidelines on AI System Definition”

- EU AI Office’s “Living Repository of AI Literacy Practices”

- European Data Protection Board’s “Opinion 28/2024 on AI and Data Protection”

- EU Commission’s “Guidelines on Prohibited AI Practices”